AI Passes the CFA Level III Exam in Just a Few Minutes

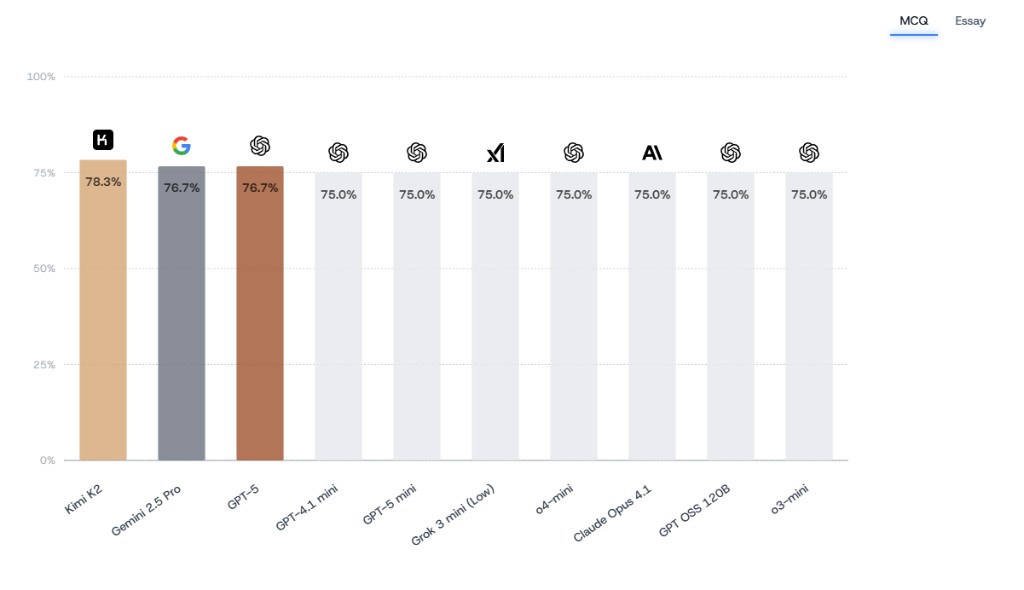

Recent research shows that multiple AI models can pass the prestigious CFA Level III exam in just a few minutes, whereas humans typically need years of study. Gemini 2.5 Pro performed best overall, and China's KIMI K2 achieved 78.3% accuracy on multiple-choice questions. Despite AI's impressive exam results, experts believe it still cannot fully replace human finance professionals in areas such as contextual understanding and intent judgment.

Recent research shows that multiple AI models can pass the prestigious CFA Level III exam in just a few minutes, while humans typically require several years and about 1,000 hours of study to complete it.

Researchers from New York University's Stern School of Business and the AI wealth management platform GoodFin tested 23 large language models and found that cutting-edge reasoning models, including o4-mini, Gemini 2.5 Pro, and Claude Opus, were able to successfully pass the CFA Level III mock exam.

These models used "chain-of-thought prompting," which allowed them to handle the essay questions that had previously tripped up AI. GoodFin founder and CEO Anna Joo Fee stated:

I believe this technology absolutely has the potential to change the entire industry.

AI Models Break Through CFA Level III Exam Barriers

A study conducted two years ago found that AI models could pass the CFA Level I and II exams but faced significant obstacles in the essay section of Level III.

The latest research confirms that rapid advances in AI have overcome this critical bottleneck.

The research team tested 23 large language models on the multiple-choice and essay questions of a CFA Level III mock exam, focusing in particular on whether the models have the specialized knowledge and high-stakes analytical reasoning that professional financial decision-making requires.

The results showed that reasoning models significantly outperformed traditional non-reasoning models on complex financial problems. Notably, the cutting-edge reasoning models that used "chain-of-thought prompting" passed the mock exam.

Among them, Gemini 2.5 Pro received the highest essay score (3.44) and also ranked first in overall performance, with a combined multiple-choice and essay score of 2.1.

It is worth noting that China's KIMI K2 model performed best on multiple-choice questions, with 78.3% accuracy, surpassing Google's Gemini 2.5 Pro and OpenAI's GPT-5.

The researchers tested three prompting strategies: zero-shot, self-consistency, and self-discovery. Self-consistency performed best, with a score of 73.4%.
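The article does not say how the study implemented self-consistency. In the general technique, a model is prompted several times with chain-of-thought reasoning and the most common final answer is kept. Below is a minimal sketch of only that voting step, assuming the sampled answers to one multiple-choice question have already been collected as answer letters; the function name `self_consistency_vote` is hypothetical and not taken from the study.

```python
from collections import Counter


def self_consistency_vote(sampled_answers: list[str]) -> str:
    """Hypothetical helper: return the most frequent final answer among
    several sampled chain-of-thought completions.

    Assumes each completion has already been reduced to an answer letter.
    Ties go to the answer that was sampled first.
    """
    if not sampled_answers:
        raise ValueError("need at least one sampled answer")
    # Normalize so "b", " B " and "B" are counted as the same choice.
    normalized = [answer.strip().upper() for answer in sampled_answers]
    # Counter.most_common orders equal counts by first occurrence.
    return Counter(normalized).most_common(1)[0][0]


if __name__ == "__main__":
    # Five sampled answers to one question; "B" appears three times.
    print(self_consistency_vote(["B", "A", "B", "C", "b"]))  # prints "B"
```

Majority voting is what distinguishes self-consistency from a single zero-shot answer: an occasional faulty reasoning path is outvoted by the others, at the cost of several model calls per question.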

In the cost-effectiveness analysis, Llama 3.1 8B Instant achieved the best cost-efficiency score (5468), while Palmyra Fin was the fastest model, with an average response time of 0.3 seconds.

Despite AI's strong performance on standardized tests, industry experts believe it still has limitations that keep it from fully replacing human financial professionals. Fee emphasized:

In areas such as contextual understanding and judging intent, machines currently struggle to make accurate assessments. This is precisely where humans have the advantage: they can read clients' body language and various other cues.