MU (Trans): GPM will continue to expand, but the pace will moderate.

Below is Dolphin Research's transcript (Trans) of MU's FY2026 Q1 earnings call. For our First Take, see 'MU: AI Ignites Memory Upcycle?'.

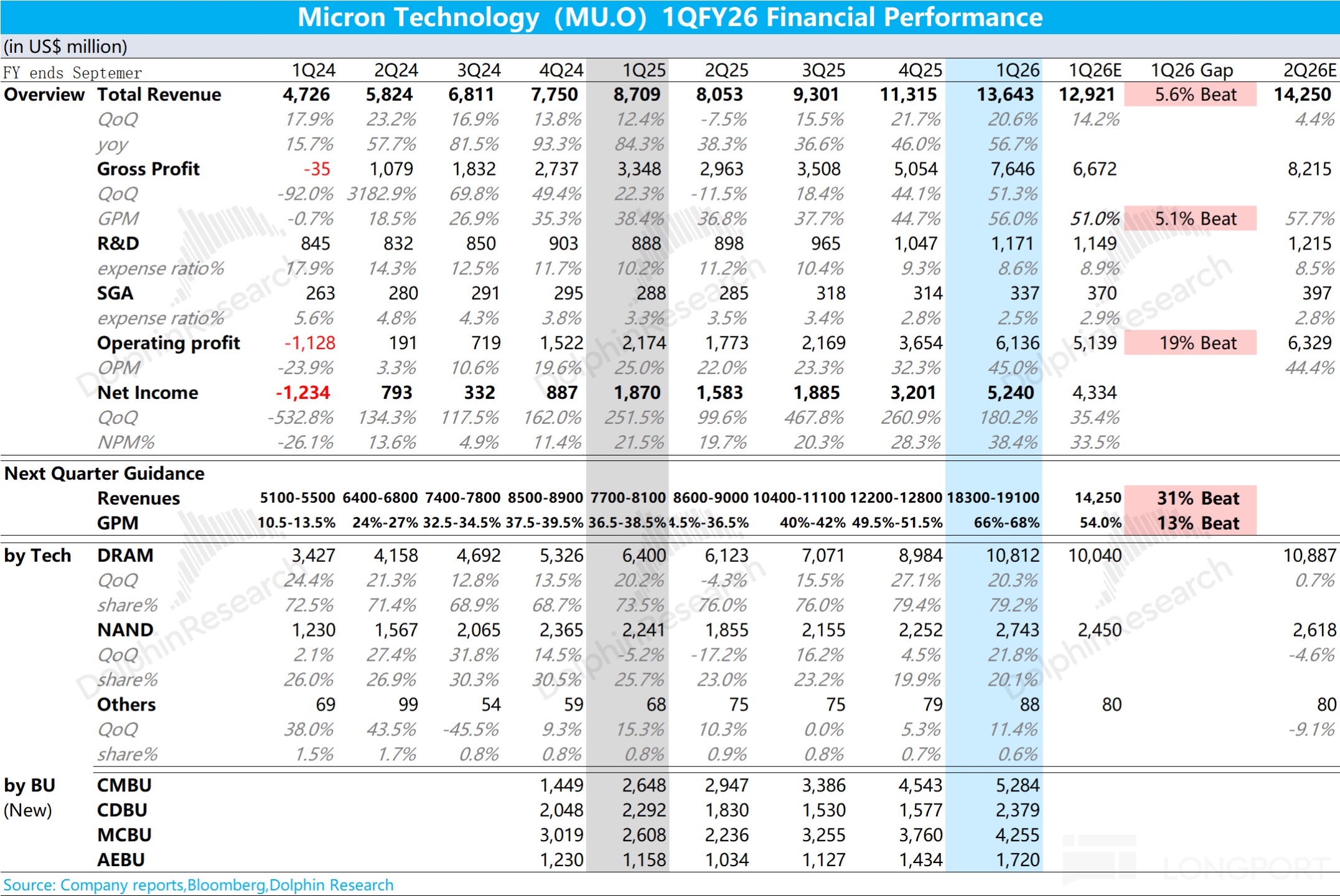

I. Micron Technology (MU.US) Key Financials Recap

Revenue: Q1 revenue reached $13.6bn, up 21% QoQ and 57% YoY, a record high for the company.

GPM: Blended GPM was 56.8%, up 1,100bps QoQ. Pricing, cost controls, and mix drove the expansion.

EPS: Non-GAAP diluted EPS was $4.78, up 58% QoQ and 167% YoY.

FCF: A record $3.9bn for the quarter.
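The headline growth rates above imply the prior-period baselines. The sketch below backs them out, assuming simple percentage growth on the rounded figures quoted here, so its outputs are approximations rather than reported numbers. The helper name `implied_prior` is hypothetical.

```python
# Back out implied prior-period figures from the reported growth rates.
# Inputs are the rounded figures quoted above, so outputs are approximate.

def implied_prior(current: float, growth_pct: float) -> float:
    """Return the prior-period value implied by current = prior * (1 + growth)."""
    return current / (1 + growth_pct / 100)

revenue_q1 = 13.6   # $bn
eps_q1 = 4.78       # $ per share, non-GAAP diluted
gpm_q1 = 56.8       # %

prior_quarter_revenue = implied_prior(revenue_q1, 21)   # ~11.24
year_ago_revenue = implied_prior(revenue_q1, 57)        # ~8.66
prior_quarter_eps = implied_prior(eps_q1, 58)           # ~3.03
year_ago_eps = implied_prior(eps_q1, 167)               # ~1.79
prior_quarter_gpm = gpm_q1 - 1_100 / 100                # 1,100bps = 11 pts -> 45.8

for label, value in [
    ("Prior-quarter revenue ($bn)", prior_quarter_revenue),
    ("Year-ago revenue ($bn)", year_ago_revenue),
    ("Prior-quarter EPS ($)", prior_quarter_eps),
    ("Year-ago EPS ($)", year_ago_eps),
    ("Prior-quarter GPM (%)", prior_quarter_gpm),
]:
    print(f"{label}: {value:.2f}")
```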

Segment revenue: all business units posted record revenue.

- Cloud memory: revenue $5.3bn, GPM 66%.

- Core data center: revenue $2.4bn, GPM 51%.

- Mobile & client: revenue $4.3bn, GPM 54%.

- Auto & embedded: revenue $1.7bn, GPM 45%.
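As a consistency check, the segment figures can be weighted by revenue to reproduce the blended GPM. This is a minimal sketch using the rounded segment numbers above; because those inputs are rounded, it lands near, not exactly on, the reported 56.8%. The short segment labels in the code are illustrative.

```python
# Revenue-weighted blended gross margin from the segment figures above.
segments = {
    "Cloud": (5.3, 66),               # (revenue $bn, GPM %)
    "Core data center": (2.4, 51),
    "Mobile & client": (4.3, 54),
    "Auto & embedded": (1.7, 45),
}

total_revenue = sum(rev for rev, _ in segments.values())
gross_profit = sum(rev * gpm / 100 for rev, gpm in segments.values())
blended_gpm = gross_profit / total_revenue * 100

print(f"Segment revenue total: ${total_revenue:.1f}bn")  # vs. reported $13.6bn (rounding)
print(f"Revenue-weighted GPM: {blended_gpm:.1f}%")       # vs. reported 56.8%
```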

DRAM and NAND:

- DRAM revenue $10.8bn (79% of total), pricing up ~20% QoQ.

- NAND revenue $2.7bn (20% of total), pricing up mid-teens % QoQ.

II. MU call details

2.1 Management highlights

1) Market outlook and cycle

- Supply-demand: Strong secular demand and constrained supply are tightening DRAM and NAND industry-wide. This tightness is expected to persist beyond 2026.

- Memory demand raised: Reflecting AI data center build plans, bit demand forecasts have moved higher. 2025 DRAM bit growth is now ~20% (prior >15%) and NAND >15% (prior 10%–15%). 2026 industry bit shipments for both DRAM and NAND are expected to grow ~20% vs. 2025.

- Supply constraints: Total industry supply will remain materially below demand for the foreseeable future. Surging HBM demand intensifies the tightness, since HBM consumes roughly three times the wafer capacity per bit of DDR5 (an HBM:DDR5 trade ratio of ~3:1) and that ratio is expanding. Cleanroom build cycles may also lengthen.
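The HBM:DDR5 ratio of ~3:1 helps explain why HBM growth tightens overall supply. The sketch below reads it as wafer capacity per bit (an assumption) and uses a hypothetical fab of 100 wafer units; it shows how total bit output shrinks as more wafers shift to HBM. The function name and all quantities are hypothetical.

```python
# Illustrative only: total bit output as wafers shift from DDR5 to HBM,
# assuming HBM needs ~3x the wafer capacity per bit of DDR5.

def bit_output(wafers: float, hbm_share: float, trade_ratio: float = 3.0) -> float:
    """Bits produced in DDR5-equivalent units: one wafer yields 1 unit of DDR5
    or 1/trade_ratio units of HBM. All units are hypothetical."""
    if not 0.0 <= hbm_share <= 1.0:
        raise ValueError("hbm_share must be between 0 and 1")
    ddr5_bits = wafers * (1 - hbm_share)
    hbm_bits = wafers * hbm_share / trade_ratio
    return ddr5_bits + hbm_bits

for share in (0.0, 0.2, 0.4):
    print(f"HBM wafer share {share:.0%}: {bit_output(100, share):.1f} bit units")
```

With a fixed wafer base, every wafer moved to HBM removes two-thirds of a DDR5-equivalent bit unit from total supply under this assumption.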

- Customer LTAs: MU is negotiating multi-year agreements with explicit commitments and has agreed calendar-2026 HBM pricing and volumes (incl. HBM4) with customers.

2) HBM progress

TAM: HBM TAM is projected to grow at a ~40% CAGR, from ~$35bn in 2025 to ~$100bn in 2028, reaching that milestone two years earlier than previously forecast. The 2028 HBM TAM would exceed the size of the entire DRAM market in 2024.
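The stated CAGR can be checked against the two TAM endpoints. This minimal sketch applies the standard compound-growth formula to the figures quoted above; the helper name `cagr` is illustrative.

```python
# Check the implied CAGR between the 2025 and 2028 HBM TAM figures.

def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1

hbm_tam_2025 = 35.0   # $bn
hbm_tam_2028 = 100.0  # $bn

rate = cagr(hbm_tam_2025, hbm_tam_2028, 2028 - 2025)
print(f"Implied HBM TAM CAGR, 2025-2028: {rate:.1%}")
```

The result, about 41.9%, is consistent with the "~40%" cited on the call.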

Products:

- HBM4: Industry-leading >11Gbps per pin, ramping to high-yield volume production on plan in Q2 2026, aligned with customer mass production schedules.

- HBM4E: MU is confident in its custom HBM4E engagements, which expand opportunities for differentiation.

- Capacity & capex: Incremental capex will lift HBM capacity. Singapore advanced HBM packaging is on track, expected to be a key contributor to the HBM supply chain in 2027.

3) Products

DRAM: MU has led on four consecutive DRAM nodes. 1-gamma is ramping steadily and will be the primary driver of 2026 DRAM bit growth, accounting for the majority of bit output in H2. R&D on the follow-on 1-delta and 1-epsilon nodes is fully underway.

NAND: MU has led on three consecutive NAND nodes. The G9 ramp is accelerating; G9 will be the main driver of 2026 NAND bit growth and is expected to become the largest NAND node in late FY2026. QLC mix reached a record high.

4) End-market performance and outlook

Data center/AI: AI capacity expansion is driving demand for high-performance, high-capacity memory. Server units are expected to grow at a high-teens to low-20s % rate in 2025, with strong demand continuing into 2026. MU's differentiated data center offerings span HBM, high-capacity server memory, and data center SSDs, with strong SSD momentum and rapid qualifications of the first PCIe Gen 6 SSD.

PC: Demand supported by Windows 10 sunset and AI PC. PC units are expected to grow high-single-digit % in 2025. Demand should continue in 2026, though memory supply constraints may cap some shipments.

Mobile: 2025 smartphone units expected to grow low-single-digit %. AI is lifting memory content. 1-gamma 16Gb LPDDR6 samples have shipped to leading OEMs, enabling better on-device AI.

Auto & industrial: L2+/L3 ADAS proliferation is driving strong demand. With a differentiated portfolio and share leadership, MU has secured multi-billion-dollar design-ins. Industrial demand remains solid.

5) Capex and capacity expansion

FY2026 capex is raised from $18bn to ~$20bn. The increase supports HBM capacity and 1-gamma supply for calendar 2026.

Capacity expansion:

- US: Idaho fab 1 pulled forward to mid-2027; fab 2 breaks ground in 2026, production by end-2028. New York fab 1 targets early-2026 ground-breaking, with high-volume manufacturing in 2030 and beyond.

- Japan: Expanding Hiroshima cleanroom space for advanced nodes.

- Singapore: Advanced HBM packaging to contribute in 2027.

- India: The assembly and test (AT) facility has started pilot runs, with a full ramp in 2026.

6) Q2 guidance

- Revenue: record $18.7bn (±$0.4bn).

- GPM: 68% (±100bps).

- EPS: record $8.42 (±$0.20).

- Opex: ~$1.38bn (±$20mn).

- ETR: ~15.5%.
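The guidance midpoints can be combined into a rough implied P&L. The sketch below is a back-of-the-envelope calculation under a simplifying assumption: it ignores interest, other income, and other non-operating items, so the implied share count is only indicative and is not a figure MU provided.

```python
# Rough P&L implied by the Q2 guidance midpoints.
# Simplification (assumption): non-operating items are ignored.
revenue = 18.7   # $bn
gpm = 0.68
opex = 1.38      # $bn
etr = 0.155
eps = 8.42       # $ per share

gross_profit = revenue * gpm                # ~12.72
operating_income = gross_profit - opex      # ~11.34
operating_margin = operating_income / revenue
net_income = operating_income * (1 - etr)   # ~9.58
implied_shares_bn = net_income / eps        # rough diluted share count, bn

print(f"Gross profit: ${gross_profit:.2f}bn")
print(f"Operating income: ${operating_income:.2f}bn ({operating_margin:.1%} margin)")
print(f"Net income (approx.): ${net_income:.2f}bn")
print(f"Implied diluted shares (approx.): {implied_shares_bn:.2f}bn")
```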

2.2 Q&A

Q: On LTAs, we hear DDR5 and HBM, even NAND, are bundled with terms extending into 2026, and in some cases 2027–2028. Can you discuss the nature of these agreements?

A: We are discussing multi-year contracts with several key customers across DRAM and NAND. These differ meaningfully from prior LTAs, with explicit commitments and stronger structures. Beyond that, we cannot share more now. We will provide details when appropriate.

Q: Capex is up to $20bn, and while there is no full-year revenue guide, implied capital intensity looks like ~25%–30%, below your usual ~35% framework. Is that due to factory space constraints, and does intensity move closer to 35% next fiscal year?

A: Correct, we did not guide full-year revenue. Most of the capex increase supports DRAM, especially HBM and 1-gamma capacity. From 2025 to 2026, construction (buildings) capex roughly doubles, and we expect higher capex in 2027. That said, we will remain disciplined, aligning bit supply with demand and improving capex efficiency. With favorable market conditions, capital intensity is trending lower.
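Capital intensity here is capex divided by revenue, so a given capex figure and intensity imply a revenue level. The sketch below shows the revenue each intensity would imply for ~$20bn of FY2026 capex, plus a cross-check against the Q2 revenue guide annualized (a run-rate assumption, not a company forecast).

```python
# Capital intensity = capex / revenue, so implied revenue = capex / intensity.
capex_fy26 = 20.0  # $bn

def implied_revenue(capex: float, intensity: float) -> float:
    """Revenue at which capex equals the given share of revenue."""
    return capex / intensity

for intensity in (0.25, 0.30, 0.35):
    print(f"{intensity:.0%} capital intensity -> "
          f"${implied_revenue(capex_fy26, intensity):.1f}bn revenue")

# Assumption: annualize the Q2 revenue guide midpoint as a rough run rate.
annualized_q2 = 18.7 * 4
run_rate_intensity = capex_fy26 / annualized_q2
print(f"Capex / annualized Q2 revenue: {run_rate_intensity:.1%}")
```

On that rough run rate, intensity sits around 27%, inside the analyst's ~25%–30% range.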

Q: The capex increase still seems conservative, likely reflecting cleanroom constraints, and you are not significantly accelerating cleanroom builds. Is this caution on capacity adds?

A: Tightness has persisted for multiple quarters, and node transitions are the main source of FY2026 supply growth. HBM demand exacerbates shortages across the industry, so we are accelerating tool orders and fab builds, investing in existing and new capacity (Idaho, New York). Given the large supply-demand gap, we can only fulfill ~50% to two-thirds of key customer demand, so we are working to increase supply as fast as possible.

Q: How should we think about DRAM and NAND cost reductions into calendar 2026, and any temporary cost upticks when moving from HBM3E to HBM4 due to yields?

A: Cost execution in DRAM and NAND remains strong, with scale benefits, tight spending controls, and solid yields. New sites will incur startup costs beginning in H2 2026 and continuing into 2027, but given our scale, the GPM impact should be modest. We are not providing cost guidance for the rest of the year, as mix and other factors matter. As noted, the 1-gamma DRAM and G9 NAND ramps are progressing well and will be tailwinds as they reach volume.

On HBM3E vs. HBM4, we will increase HBM4 output around Q2 to meet demand. HBM4 is performing very well at >11Gbps per pin, and we are pleased with its yield improvements, which we expect to ramp faster than HBM3E's did. The 2026 HBM mix across HBM3E and HBM4 will be driven by customer demand, and both will contribute meaningfully to 2026 revenue.

Q: With forecasts for next year's AI chip projects raised and continued use of HBM3E, are you seeing that in orders? Given 2026 is sold out and demand could rise further, how will you manage incremental HBM3E needs vs. strong HBM4 demand?

A: We will offer both HBM3E and HBM4 in 2026 and are engaged across multiple customers in the ecosystem. Both will drive strong YoY HBM revenue growth in 2026, and we will manage mix by customer needs. HBM and non-HBM DRAM will remain tight, as noted. We lifted our HBM outlook, with TAM now $100bn by 2028, two years earlier than before, as AI platforms increasingly require more HBM.

Q: Enterprise SSD posted strong QoQ growth. With demand up and lead times extending, are you also signing LTAs with enterprise SSD customers? Is SSD demand more tied to inference workloads?

A: We are proud of the enterprise SSD team. Enterprise SSDs are a key part of our data center portfolio, and we will keep focusing on share gains with a strong roadmap, deep customer engagement, and high product quality. Multi-year contracts under discussion with key customers also include data center SSDs, spanning multiple segments beyond pure DC customers.

On inference, as GenAI expands into video, SSD demand is indeed rising. Rapid shifts from training to inference and fast-evolving models and apps are driving stronger enterprise SSD growth, clearly propelled by GenAI.

Q: Can you share HBM as a % of DRAM in dollar terms, and your competitive stance next year vs. rivals catching up on HBM3?

A: We are very confident in our HBM4 competitiveness, with industry-leading performance of >11Gbps per pin and excellent power efficiency. Our HBM3E consumes 30% less power than any competitor's, and we continue to maintain that advantage.

We achieved HBM share in line with our DRAM share in Q3. In a tight supply environment we will manage HBM vs. non-HBM mix. With HBM TAM now expected at $100bn by 2028, two years earlier than prior, our 2026 HBM business should post strong YoY growth. The supply-demand gap across DRAM, including HBM, is unprecedented, and we will keep adding supply to better serve customers.

Q: On the previously mentioned ~$8bn full-year HBM revenue, with TAM raised, can you detail HBM contribution in the Nov. quarter and expectations for next quarter?

A: We are not providing line-item detail here. We highlighted record HBM revenue in FQ1. Beyond that we will not break out revenue specifics. To reiterate, HBM revenue will post strong YoY growth in 2026, and our positioning is highly favorable for overall mix management.

Q: After the Feb. quarter, how should we think about GPM into the May quarter: will it continue up or hold?

A: No GPM guide beyond Q2. We guided a record 68% in Q2, up 1,100bps QoQ and 700bps above our prior peak. We indicated the business strengthens through the year, so we believe DRAM and NAND margins can move higher. Note that at such high GPM levels, the same percentage price increase adds fewer percentage points of GPM.

Thus, we expect GPM to keep expanding after FQ2, but at a slower pace than in recent quarters or our Q1–Q2 guide. We see a constructive environment through the year, strong cost execution, and allocation of supply to the highest-value parts of the market to best serve customers.
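The point about diminishing margin gains follows from the definition GPM = 1 − cost/price. If unit cost is held constant (a simplifying assumption) and price rises by p, the new GPM is 1 − (1 − GPM)/(1 + p), so the gain in points is (1 − GPM) · p/(1 + p), which shrinks as the starting GPM rises. The sketch below applies a hypothetical 20% price increase at the Q1 actual and Q2 guided margins.

```python
# GPM after a price increase, holding unit cost constant (assumption).
# GPM = 1 - cost/price, so cost/price becomes (1 - gpm) / (1 + price_increase).

def gpm_after_price_rise(gpm: float, price_increase: float) -> float:
    """New gross margin after prices rise by price_increase (e.g. 0.20 = +20%)."""
    return 1 - (1 - gpm) / (1 + price_increase)

hypothetical_increase = 0.20  # illustrative price move, not a forecast

for start in (0.568, 0.68):   # Q1 actual and Q2 guided GPM
    new = gpm_after_price_rise(start, hypothetical_increase)
    print(f"Start {start:.1%} -> {new:.1%} (+{(new - start) * 100:.1f} pts)")
```

The same +20% price move adds about 7.2 points from 56.8% but only about 5.3 points from 68%.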

Q: With HBM TAM reaching $100bn by 2028 at ~40% CAGR, how should we think about MU's share — nearer 20% or higher?

A: We will not specify share. We will manage HBM vs. non-HBM as we do across the portfolio, balancing roadmap strength, strategy, and customer relationships. Given constructive fundamentals, portfolio tailwinds, and rising memory value across HBM and non-HBM in data center and beyond, we are well positioned for the mid to long term.

Q: On the LTAs under negotiation, what is the timing to sign, and are there any hurdles? With AI players seeking large capacity, can they participate in new fab builds?

A: We will not disclose specifics. Key customers are concerned about securing long-term memory in this environment, which is driving constructive discussions on supply and other explicit commitments in these multi-year structures that are stronger than prior agreements. We must weigh total supply capacity as we prioritize strategic relationships. As noted, in the mid term we can meet only about half to two-thirds of several key customers' needs, which must be reflected in LTA discussions.

Q: Given HBM demand strength and 2026 sold out, are prices locked, or do they float with demand intensity as with DDR5?

A: We are pleased with our product positioning and customer engagement. As noted in prepared remarks, we have finalized 2026 HBM quantities and pricing with customers. HBM profitability is strong with strict ROIC focus, and non-HBM profitability is healthy as reflected in results and guidance.

Q: Outside DC/AI, when do memory price increases impact electronics demand? Any elasticity in 2026 for consumer and traditional enterprise, and how might that affect next year's memory pricing?

A: We noted some consumer markets may see unit demand affected by memory pricing. Some customers, such as in smartphones and PCs, may adjust product mix given available supply, which is embedded in our outlook. Even so, we still see a large supply-demand gap and a very tight supply environment.

Importantly, AI from data center to edge devices (smartphones, PCs, etc.) requires more memory. Without sufficient memory, AI features and performance on edge devices suffer. In short, AI from cloud to edge is driving higher memory content and demand, and is why customers seek multi-year supply assurance with us.

Risk disclosure and statements: Dolphin Research Disclaimer & General Disclosure